On Governing Frontier AI Models: My Summer Fellowship at the Oxford Martin AI Governance Initiative

This past summer, I had the exciting opportunity to pursue research with the Oxford Martin AI Governance Initiative (AIGI), specifically within their Risk Management workstream. I chose this fellowship to build upon my existing experience in AI governance as a Research Assistant at Stanford’s Institute for Human-Centered Artificial Intelligence (HAI) and investigate open questions in frontier model governance. This placement would not have been possible without the generous support of the Freeman Spogli Institute’s Summer Internship Fund as well as the guidance and advice of MIP staff and alumni.

Working at Oxford AIGI immersed me in the rapidly evolving field of AI governance at a critical moment in AI innovation. My role encompassed several key responsibilities: I co-authored two papers, one examining open questions in foundation model governance, and another (currently underway) focused on harmonizing AI benchmarks. I also prepared team meetings, coordinated with research collaborators across institutions, and learned to facilitate the kind of cross-disciplinary dialogue that makes effective AI governance possible.

One of the most exciting aspects of the fellowship was attending the “AI for Good Conference” and the “World Summit on the Information Society” in Geneva, Switzerland, where I tracked diplomatic developments in AI standard setting. Witnessing firsthand how international organizations, governments, and civil society groups navigate the complexities of AI governance gave me invaluable insight into the diplomatic dimensions of technology policy.

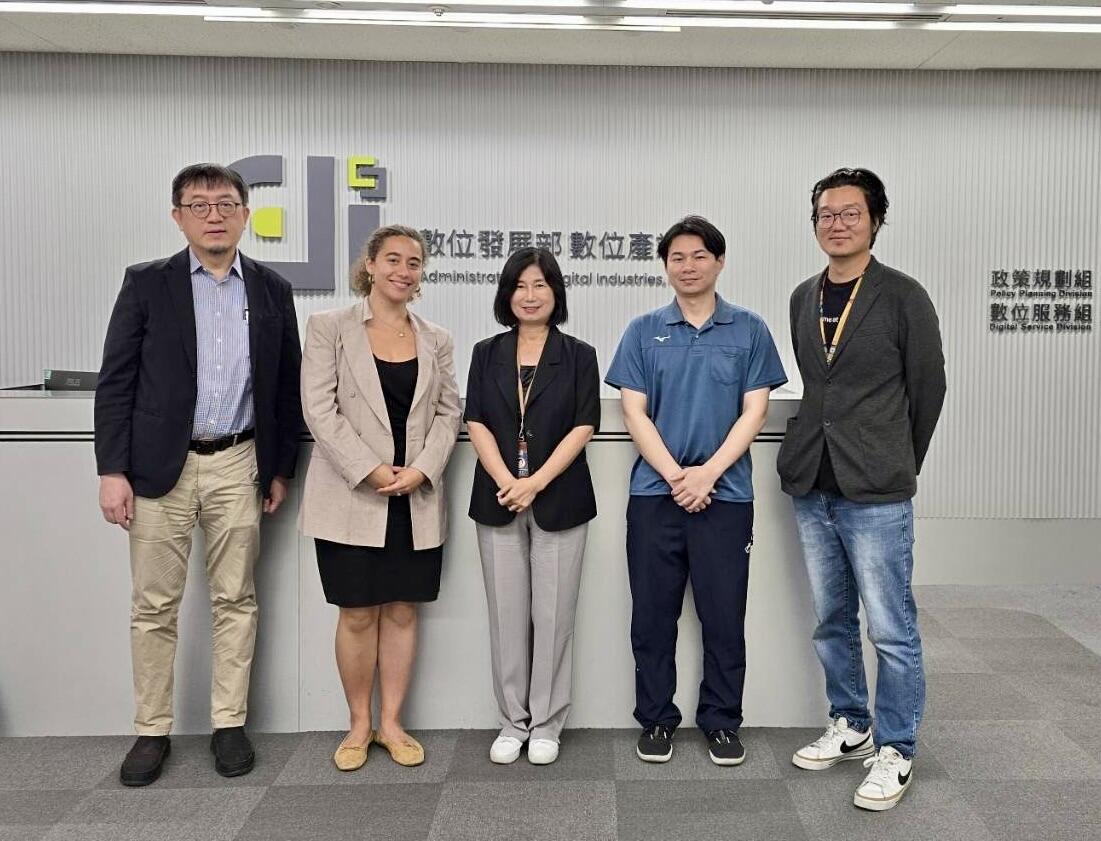

Another highlight was interviewing the Deputy Director of Digital Industries of Taiwan’s Ministry of Digital Affairs about Taiwan’s upcoming Basic AI Law. The conversation gave me useful insight into the evolving AI policy context in East Asia, which informed my comparative research on AI benchmarks.

One of my most significant accomplishments during the fellowship was launching my own research paper and recruiting collaborators for the project. This experience taught me the importance of clearly articulating research questions and building coalitions around shared interests in the AI governance space. The collaborative nature of the work at AIGI demonstrated how effective governance requires bringing together diverse perspectives and expertise. Working in cross-functional teams that included policy experts, legal scholars, and technical specialists was fascinating. I learned that meaningful AI governance cannot emerge from any single discipline in isolation; it requires dialogue across disciplines, which became particularly important during the review process of our paper.

The fellowship significantly broadened my perspective on the AI ecosystem. At AIGI, I was embedded in an environment focused on safety and long-term risk assessment, which offered a different lens from my previous experience at Stanford, where the focus was more on industry deployment and Trust and Safety. Interacting with policy experts advising the UK AISI and the EU AI Office also proved crucial for researching AI governance across jurisdictions, particularly when the questions I investigated overlapped with existing legal frameworks, such as whistleblower protections for employees of AI companies.

This summer fellowship proved invaluable for clarifying my career interests and identifying potential paths forward. My growing interest in AI governance and international standard setting has been sharpened through this experience, helping me plan for my second year at MIP and my upcoming job search.

Beyond the formal aspects of the fellowship, one of the most rewarding elements was getting to know the Oxford and London AI safety community. The team at LISA (London Initiative for Safe AI) hosted me for most of the summer and offered opportunities to network through a career fair and evening talks on different aspects of technical AI safety. Working alongside them was particularly enriching and helped me ground my work in broader AI safety debates.

This fellowship reinforced my belief that effective AI governance requires sustained collaboration between technical experts, policymakers, legal scholars, and civil society representatives. As AI systems become more capable and their societal impact grows, the need for thoughtful, evidence-based governance frameworks becomes ever more critical.