When AI Algorithms Decide Whether Your Insurance Will Cover Your Care

In this Health Affairs study, Stanford researchers examine both the promised efficiency gains and the risk of supercharged flaws as insurers race to use artificial intelligence in health care.

Health insurers are rapidly turning to artificial intelligence to evaluate requests for coverage of medical procedures, drugs, and other services. While AI has many potential benefits, concerns have been raised about the lack of human review of those decisions.

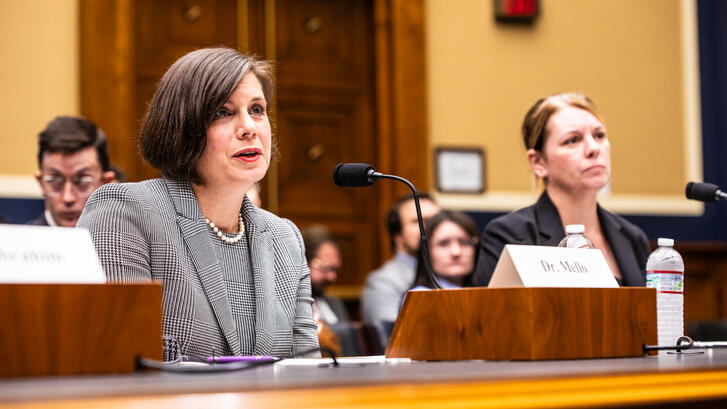

In an article published in Health Affairs, Michelle Mello, JD, PhD, and three Stanford colleagues write that as AI use in health care grows rapidly, patients and policymakers are increasingly concerned about who's overseeing these tools. They worry that insurance staff don't understand how AI works and that opaque decision-making could lead AI tools to make mistakes or produce unfair outcomes.

“Several cracks have emerged in the vision of a well-functioning, AI-driven insurance ecosystem,” writes Mello, a professor of health policy in the Department of Health Policy in the School of Medicine and of law at Stanford Law School. “A major worry is that wrongful denials may be occurring as a result of a lack of meaningful human review of recommendations made by AI.”

Physicians and patients alike have long reviled insurers' requirements for prior authorization before treatment, a practice that often leads to delays and wrongful denials of coverage. Adding AI to this mix without adequate oversight could make things even worse by supercharging the flaws of the existing process.

The researchers are quick to add, however, that AI could also help mend the broken system if used wisely. They offer recommendations on how to harness the benefits of AI while keeping potential risks in check.

The research team describes several concerns about insurers’ use of AI:

- Human reviewers at insurance companies may lack the time, expertise, and incentives to be effective reviewers of recommendations made by AI.

- The opacity of AI algorithms makes it hard to know why a particular determination was made, which in turn makes it hard to challenge the determination.

- AI tools may not consider important information bearing on the patient’s need for the service—for example, tools assessing when a patient can safely be discharged from a rehab hospital rarely have data about the patient’s social supports at home.

- Algorithms trained on insurers’ past coverage decisions will lock in flawed aspects of those decisions.

- Many insurers don’t have robust governance processes through which they could monitor the accuracy and potential biases of the AI tools they have adopted.

Sizing Up the Ethics of AI Use

The other co-authors of the Health Affairs study are Artem A. Trotsyuk, PhD, a postdoctoral scholar in the Stanford University Department of Genetics; Abdoul Jalil Djiberou Mahamadou, PhD, MSc, a postdoctoral fellow at the Stanford Center for Biomedical Ethics; and Danton Char, PhD, MD, a pediatric cardiac anesthesiologist, clinical researcher, and empirical bioethics researcher in Stanford's Department of Anesthesia.

All are members of the Stanford Healthcare Ethical Assessment Lab for AI, a team working to identify and address ethical issues arising from the use of AI applications in health care.

“Drawing on empirical work on AI use and our own ethical assessments of provider-facing tools as part of the AI governance process at Stanford Health Care, we examine why utilization review has attracted so much AI innovation and why it is challenging to ensure responsible use of AI,” Mello and her colleagues write in the Health Affairs study.

They note that the uptake of AI tools by insurance companies has been dramatic. A 2024 National Association of Insurance Commissioners survey of 93 large health insurers in 16 states found that 84% were using AI for some operational purposes.

Potential Benefits

It's understandable that health insurers are turning to algorithms in utilization review, the process through which they verify the appropriateness of coverage requests. Physicians and other health care providers, too, are increasingly using AI to prepare insurance coverage requests and appeal denials. The prior authorization process in particular is costly, time-consuming, and a major source of provider burnout and care delays.

Even before AI, studies showed high denial rates for prior authorization requests and even higher reversal rates on appeal, including an 82% overturn rate in Medicare Advantage plans, write Mello and her colleagues. Processing insurance claims for services already provided has similar problems: automation is unavoidable given the volume, but both older algorithms and human reviewers make frequent errors. Many wrongful denials are never reversed, in part because confusing explanation letters discourage patients from appealing.

“AI could address these problems in three ways,” the researchers write. “First, there are opportunities to fully automate prior authorization and claims approvals. Despite concern about denials, most requests are approved.”

They note that Medicare Advantage plans approved more than 93% of prior authorization requests from 2019 to 2023. Many of the tasks involved in evaluating insurance requests are well suited to AI, such as checking coverage rules or extracting basic patient data from the medical record. Automating approval of clearly allowable requests could reduce delays and stress while freeing human reviewers at insurance companies to focus on complex cases.

Second, AI could significantly reduce denials caused by incomplete or unclear submissions. Because insurers lack direct access to patients' medical records, they rely on summaries often prepared by non-clinical staff at doctors' offices and hospitals. AI tools can help these staff members pull relevant clinical data, guide them on demonstrating medical necessity, link to supporting documents, and check submissions for completeness. Although generative AI can produce errors, improving models and safeguards make these uses increasingly feasible.

Finally, AI could lower barriers to appealing denials, helping correct wrongful decisions and discouraging inappropriate ones. Predictive tools can already flag the denials most likely to be overturned, while generative AI can draft appeals by synthesizing relevant clinical information from the medical record. AI could also make Explanation of Benefits letters easier for patients to understand and help insurers meet Centers for Medicare & Medicaid Services (CMS) requirements to provide specific reasons for denials.

The Unknowns

Unfortunately, data are not publicly available to help patients and researchers understand whether using AI produces better or worse outcomes in insurance processes.

“Insurers complain that they are wrongfully vilified but haven’t shared the information that would validate their claims that AI is benefiting their clients,” Mello said. “Unfortunately, it seems that many insurers don’t have strong governance processes in place to make sure these problems don’t arise.”

The researchers note that while AI holds great promise for improving health care, two-thirds of adults in the United States have little trust that it will be used responsibly. And consumers rate health insurers among the least trusted sectors of the health care system.

“It is no surprise, then, that health insurers’ use of AI has sparked controversy and litigation,” they write.